Supporting Developers So They Can Build More Responsible AI

Impact: We developed new toolkits and methods to help AI developers

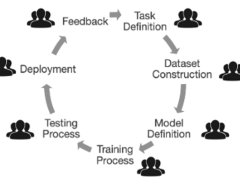

To achieve more responsible artificial intelligence (AI) systems, we need to support the industry practitioners building them.

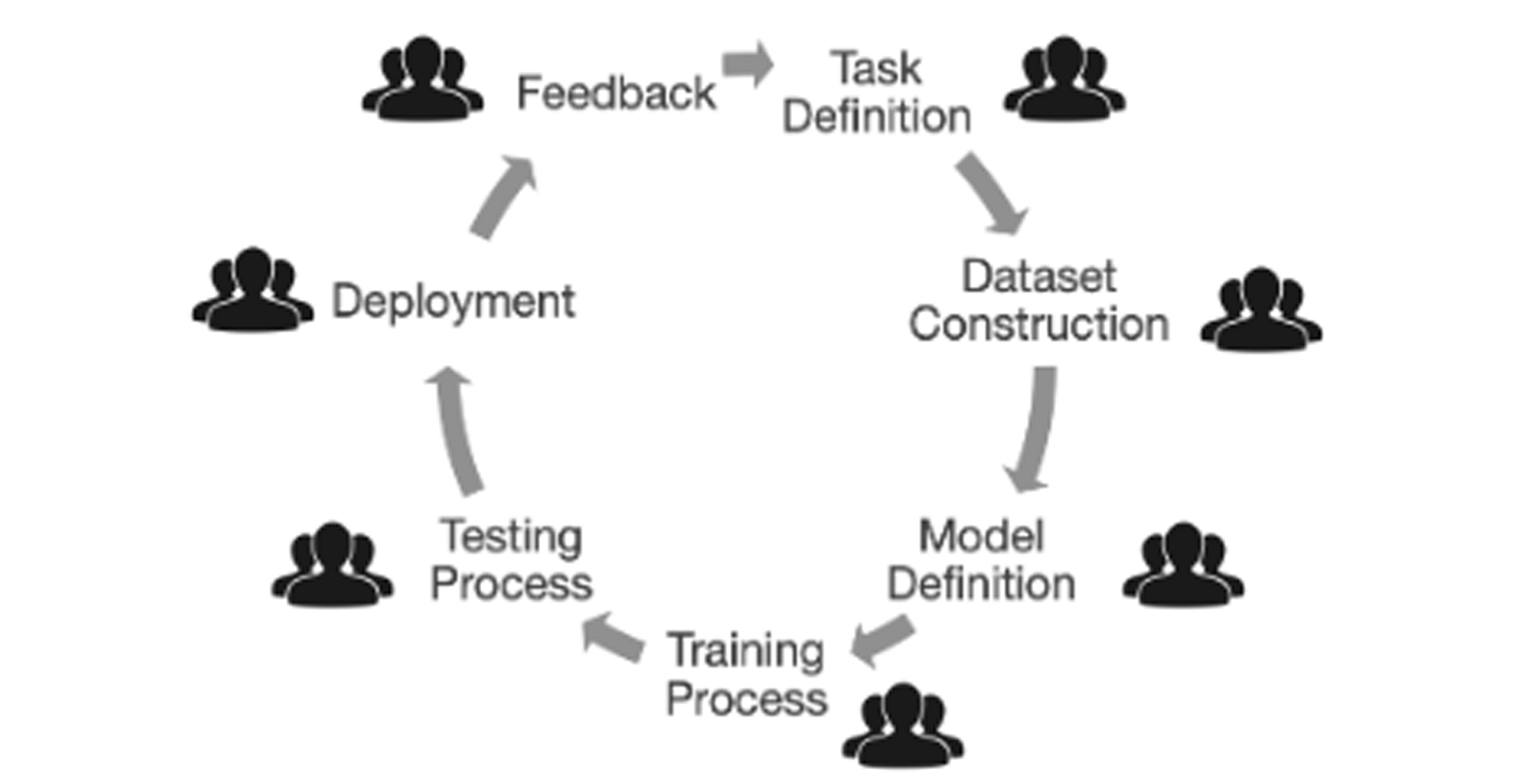

Our research has directly informed the development of new practical methods and software toolkits for responsible AI. Fairness toolkits support and empower developers to build more equitable and responsible AI systems by making fairness a central part of the development lifecycle. These toolkits and methods are used by AI developers worldwide.

We conducted the first studies to understand what challenges AI developers face when attempting to develop responsible AI systems, resulting in new methods and tools that are more useful in practice.

The findings of this work led to...

- The development of Fairlearn, an open-source, community-driven project that helps data scientists improve the fairness of AI systems. Fairlearn currently has about 100 contributors, spanning multiple technology companies and universities across the world. This research also informed the design of responsible AI toolkits, guidelines, and playbooks, such as FairCompass, ABOUT ML, The Situate AI Guidebook, and The Generative AI Ethics Playbook.

- Improvements to the usability and usefulness of existing responsible AI toolkits, such as IBM’s AI Fairness 360 toolkit.

- The development of several new algorithmic methods that can assess and mitigate unfairness in AI systems even when individual demographic data is unavailable. This is a common situation in many real-world settings where organizations may be prohibited from collecting or using certain demographic data.

- New software toolkits and platforms for responsible AI development, including Zeno, WeAudit, and Policy Projector.

- Industry approaches to user engagement in AI red teaming, testing, and auditing, informed by our team’s discussions with companies such as OpenAI, Microsoft, Google, and UL.

- Contributions to policy reports, such as the National Telecommunications and Information Administration (NTIA)’s report on “Artificial intelligence accountability policy.”

Supported by: The National Science Foundation (NSF), Microsoft Research, Apple, PwC, Amazon, IBM, K&L Gates, and the CMU Block Center for Technology and Society

Timing: This line of research started in 2018 and is ongoing.

Related work:

- "Improving Fairness in Machine Learning Systems: What Do Industry Practitioners Need?"

- "Exploring How Machine Learning Practitioners (Try To) Use Fairness Toolkits"

- "Understanding Practices, Challenges, and Opportunities for User-Engaged Algorithm Auditing in Industry Practice"

- "Zeno: An Interactive Framework for Behavioral Evaluation of Machine Learning"

- "Investigating Practices and Opportunities for Cross-functional Collaboration around AI Fairness in Industry Practice"

- "Investigating How Practitioners Scope, Motivate, and Conduct Privacy Work When Developing AI Products"

- "The Situate AI Guidebook: Co-Designing a Toolkit to Support Multi-Stakeholder, Early-stage Deliberations Around Public Sector AI Proposals"

- "WeAudit: Scaffolding User Auditors and AI Practitioners in Auditing Generative AI"

- "AI Policy Projector: Grounding LLM Policy Design in Iterative Mapmaking"

- "AI Mismatches: Identifying Potential Algorithmic Harms Before AI Development"

Researchers: Jeff Bigham, Alex Cabrera, Sauvik Das, Wesley Deng, Motahhare Eslami, Jodi Forlizzi, Hoda Heidari, Ken Holstein, Jason Hong, Ji-Youn Jung, Anna Kawakami, Mary Beth Kery, Hank Lee, Michael Madaio, Dominik Moritz, Adam Perer, Hong Shen, Zhiwei Steven Wu, Nur Yildirim, Haiyi Zhu, John Zimmerman

Research Areas: Human-Centered AI, Tools and Programming, Responsible Computing, Ethics and Policy

Looking at Additional HCII Impacts...

NoRILLA Interactive Mixed-Reality Science for Kids

Our novel, mixed-reality intelligent science stations bridge the physical and virtual worlds. Millions of children and families across the US are learning more science and improving their critical thinking skills after predicting, observing and explaining experiments with our patented system at their schools and museums.

Protecting Millions of People from Phishing Scams

We studied the social aspects of phishing attacks and protected millions of people through education and training. This work led to new educational methods to raise awareness, effective anti-phishing warnings, and algorithmic detection of phishing attacks.

Tiramisu Supported Public Transit Riders and Research

Years before the public transit buses in Pittsburgh had GPS location trackers, we were exploring how riders could be the co-creators of mobile transit info. During this 10-year research project, the Tiramisu app made it easier for travelers with disabilities to engage in opportunistic travel and benefited 75,000 unique transit riders.

Developing Novel Interfaces for Livestreamed Gaming

Livestream gaming viewers are limited in the ways they can participate, but this team found that designing a better experience for livestreams has a variety of benefits for audiences worldwide.

Creating Accessible Visualizations with Chartability

The Chartability framework has empowered people around the world to recognize the parts of a data visualization that produce barriers for people with disabilities. It has contributed to dozens of design languages, visualization guides, accessibility curricula at universities, and the data journalism seen on news websites.

TapSense Improved Touch Detection on Millions of Smartphones

We trained smartphones to reliably detect four different touchscreen inputs – for example, a tap by a finger pad as compared to a knuckle – which created opportunities for new interactions and features.

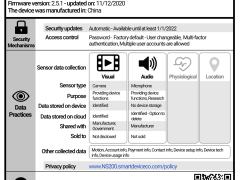

Grading Your Smartphone Apps on Their Privacy Practices

We created a model that analyzed over 1 million smartphone apps on their privacy and data collection practices, and then assigned them a public-facing privacy grade. This easy-to-follow grading system raised public awareness and led to improved privacy practices from several app developers.

Improved Math Mastery with the Decimal Point Game

We explored the learning analytics behind our digital learning game to see how student learning responds to changes in the game's curriculum. Over the past 10 years, over 1,500 students have benefited from the Decimal Point game and curriculum materials.

Understanding the Impacts of AI in Child Welfare

We conducted the first field studies to understand how child welfare workers incorporate AI-based recommendations into their decision-making, which had far-reaching policy impacts.

Designing Teacher-AI Collaboration Systems

Hundreds of elementary and middle school students across the US have learned more as a result of our work on real-time, teacher-AI collaboration systems in K-12 classrooms.

Millions Learned More Math with Our AI Tutor

Millions of middle school and high school students across the US have learned more math as a result of our decades of work with AI-based cognitive tutoring systems.

Human-Centered Privacy for the Internet of Things

As more gadgets become “smart” things and the Internet of Things (IoT) expands to tens of billions of connected devices, we want consumers to be aware of what their devices are doing with their personal data.

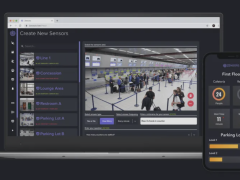

Zensors Turns Cameras Into Smart Sensors

In one application installed at the Pittsburgh International Airport in 2019, Zensors uses existing cameras as powerful general-purpose sensors to provide wait time estimates for the security line, which benefits almost 10M PIT travelers per year.

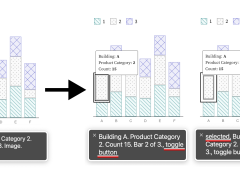

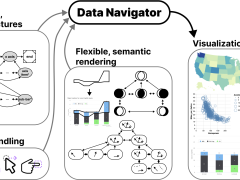

Accessible Visualization Tooling with Data Navigator

A visual labeled as “an image of a bar chart” or that requires a mouse hover to display important info does not support all users. We created the Data Navigator system to give developers a flexible foundation for designing and building more inclusive data experiences.