Grading Your Smartphone Apps on Their Privacy Practices

Impact: Our public-facing privacy grade raised awareness of app data collection

Perhaps you have uninstalled a smartphone app after learning what sensitive data it was collecting. If so, you’re not alone.

Studies have shown that the general public has serious concerns about the privacy – or lack thereof – of their smartphone apps, and we saw an opportunity to raise awareness of cases where expectations of data privacy do not match reality. Our team created PrivacyGrade.org, which assigned letter grades to over 1 million smartphone apps and led to improved privacy practices from several app developers.

We created a model that analyzed smartphone apps on their privacy and data collection practices, and then assigned them a public-facing privacy grade.

This work led to...

- The PrivacyGrade.org website. We created a model that evaluated apps’ data collection practices, took into account when data collection went too far (we’re looking at you, flashlight apps that use GPS), and assigned a letter grade. This approachable way of grading apps resonated with the public when the work was featured on CNN, NPR, CNBC, BBC, Wired, the New York Times, and more.

- Improvements with Android. Our team shared PrivacyGrade data with eight different research teams, including researchers at Google, for evaluating the privacy of apps on the Play Store. We also helped with the design and implementation of Privacy-Enhancements for Android, an open source variant of Android with specific features for privacy.

- Speaking opportunities to raise awareness of our findings. Our team gave multiple talks about our work analyzing the privacy of smartphone apps to researchers and developers at Google, Apple, Facebook, Intel, and Samsung, as well as to the FTC and the Congressional Internet Caucus. By raising awareness that some apps were overcollecting private smartphone data, this work helped lead to FTC fines.

- Additional privacy support tools. Our team developed multiple tools for analyzing smartphone app privacy, as well as tools to help developers with privacy. For example, Matcha is an IDE plugin for Android Studio that uses privacy annotations to help generate Google Play Safety Labels.

Supported by: National Science Foundation, DARPA, Google, Alfred P. Sloan Foundation, Army Research Office, and NQ Mobile.

Timing: 2012 - 2024

Related work:

- See Android Privacy Interfaces and PrivacyGrade.org for more information

- “Hong Leads Effort to Give Apps Privacy Grade”

Researchers: Jason Hong and team

Research Area: Usable Privacy and Security

Looking at Additional HCII Impacts...

NoRILLA Interactive Mixed-Reality Science for Kids

Our novel, mixed-reality intelligent science stations bridge the physical and virtual worlds. Millions of children and families across the US are learning more science and improving their critical thinking skills after predicting, observing and explaining experiments with our patented system at their schools and museums.

Protecting Millions of People from Phishing Scams

We studied the social aspects of phishing attacks and protected millions of people through education and training. This work led to new educational methods to raise awareness, effective anti-phishing warnings, and algorithmic detection of phishing attacks.

Tiramisu Supported Public Transit Riders and Research

Years before public transit buses in Pittsburgh had GPS location trackers, we were exploring how riders could be the co-creators of mobile transit info. During this 10-year research project, the Tiramisu app made it easier for travelers with disabilities to engage in opportunistic travel and benefited 75,000 unique transit riders.

Developing Novel Interfaces for Livestreamed Gaming

Livestream gaming viewers are limited in the ways they can participate, but this team found that designing a better experience for livestreams has a variety of benefits for audiences worldwide.

Creating Accessible Visualizations with Chartability

The Chartability framework has empowered people around the world to recognize the parts of a data visualization that produce barriers for people with disabilities. It has contributed to dozens of design languages, visualization guides, accessibility curricula at universities, and the data journalism seen on news websites.

TapSense Improved Touch Detection on Millions of Smartphones

We trained smartphones to reliably detect four different touchscreen inputs – for example, a tap by a finger pad as compared to a knuckle – which created opportunities for new interactions and features.

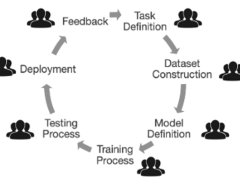

Supporting Developers So They Can Build More Responsible AI

We conducted the first studies with industry practitioners to understand the challenges they face when building responsible AI systems. Our tools and methods are now supporting developers around the world.

Grading Your Smartphone Apps on Their Privacy Practices

We created a model that analyzed over 1 million smartphone apps on their privacy and data collection practices, and then assigned them a public-facing privacy grade. This easy-to-follow grading system raised public awareness and led to improved privacy practices from several app developers.

Improved Math Mastery with the Decimal Point Game

We explored the learning analytics behind our digital learning game to see how student learning responds to changes in the game’s curriculum. In the past 10 years, more than 1,500 students have benefited from the Decimal Point game and curriculum materials.

Understanding the Impacts of AI in Child Welfare

We conducted the first field studies to understand how child welfare workers incorporate AI-based recommendations into their decision-making, which had far-reaching policy impacts.

Designing Teacher-AI Collaboration Systems

Hundreds of elementary and middle school students across the US have learned more as a result of our work on real-time, teacher-AI collaboration systems in K-12 classrooms.

Millions Learned More Math with Our AI Tutor

Millions of middle school and high school students across the US have learned more math as a result of our decades of work with AI-based cognitive tutoring systems.

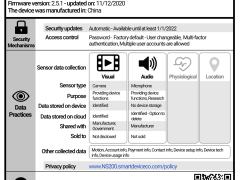

Human-Centered Privacy for the Internet of Things

As more gadgets become “smart” things and the Internet of Things (IoT) expands to tens of billions of connected devices, we want consumers to be aware of what their devices are doing with their personal data.

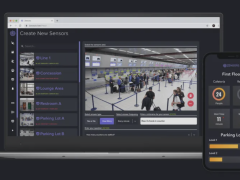

Zensors Turns Cameras Into Smart Sensors

In one application installed at the Pittsburgh International Airport in 2019, Zensors uses existing cameras as powerful general-purpose sensors to provide wait time estimates for the security line, which benefits almost 10 million PIT travelers per year.

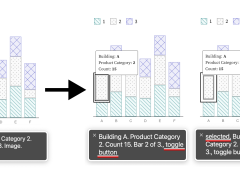

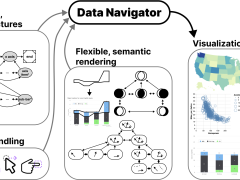

Accessible Visualization Tooling with Data Navigator

A visual that is labeled only as “an image of a bar chart” or that requires a mouse hover to display important information does not support all users. We created the Data Navigator system to give developers a flexible foundation for designing and building more inclusive data experiences.