Understanding the Impacts of AI in Child Welfare

Child welfare workers across the United States rely on artificial intelligence (AI)-based recommendations to guide their decision-making, but they do so without a clear understanding of the potential bias in the software. Our research shed light on how AI actually affects workers’ decision-making and had far-reaching policy impacts.

We conducted the first field studies to understand how child welfare workers incorporate AI-based recommendations into their decision-making, which led at least one government agency to discontinue use of a similar AI tool.

This work led to...

- The State of Oregon’s decision to discontinue use of a similar AI tool. Findings from this research directly informed that decision; the tool was modeled after the deployment studied in this work.

- A Department of Justice investigation into the use of algorithms in child welfare decision-making in the US.

- American Civil Liberties Union (ACLU) policy reports on the use of algorithms in child welfare decision-making in the US, which this work informed through our team’s discussions with the ACLU.

- Recommendations in the Blueprint for an AI Bill of Rights and the AI Executive Order, which this work helped to inform through our team’s discussions with the White House Office of Science and Technology Policy (OSTP).

- Recommendations in the Center for Democracy and Technology report on “Fostering responsible tech use: Balancing the benefits and risks among public child welfare agencies,” which this work informed.

- Recommendations in the US Office of Human Services Policy’s report on “Avoiding racial bias in child welfare agencies’ use of predictive risk modeling,” which this work informed.

- The development of a Day One policy memo on the need to develop a federal center of excellence for state and local artificial intelligence (AI) procurement and use.

Supported by: The National Science Foundation (NSF) & the CMU Block Center for Technology and Society

Timing: This research started in 2020 and is ongoing.

Related work:

- "How child welfare workers reduce racial disparities in algorithmic decisions"

- "Improving human-AI partnerships in child welfare: Understanding worker practices, challenges, and desires for algorithmic decision support"

- "'Why do I care what’s similar?' Probing challenges in AI-assisted child welfare decision-making through worker-AI interface design concepts"

Researchers: Hao-Fei Cheng, Yanghuidi Cheng, Ken Holstein, Anna Kawakami, Adam Perer, Venkatesh Sivaraman, Logan Stapleton, Diana Qing, Zhiwei Steven Wu, Haiyi Zhu

Research Areas: Responsible Computing, Ethics and Policy, Human-Centered AI

Looking at Additional HCII Impacts...

NoRILLA Interactive Mixed-Reality Science for Kids

Our novel, mixed-reality intelligent science stations bridge the physical and virtual worlds. Millions of children and families across the US are learning more science and improving their critical thinking skills after predicting, observing and explaining experiments with our patented system at their schools and museums.

Protecting Millions of People from Phishing Scams

We studied the social aspects of phishing attacks and protected millions of people through education and training. This work led to new educational methods to raise awareness, effective anti-phishing warnings, and algorithmic detection of phishing attacks.

Tiramisu Supported Public Transit Riders and Research

Years before the public transit buses in Pittsburgh had GPS location trackers, we were exploring how riders could be the co-creators of mobile transit info. During this 10-year research project, the Tiramisu app made it easier for travelers with disabilities to engage in opportunistic travel and benefited 75,000 unique transit riders.

Developing Novel Interfaces for Livestreamed Gaming

Livestream gaming viewers are limited in the ways they can participate, but this team found that designing a better experience for livestreams has a variety of benefits for audiences worldwide.

Creating Accessible Visualizations with Chartability

The Chartability framework has empowered people around the world to recognize the parts of a data visualization that produce barriers for people with disabilities. It has contributed to dozens of design languages, visualization guides, accessibility curricula at universities, and the data journalism seen on news websites.

TapSense Improved Touch Detection on Millions of Smartphones

We trained smartphones to reliably detect four different touchscreen inputs – for example, a tap by a finger pad as compared to a knuckle – which created opportunities for new interactions and features.

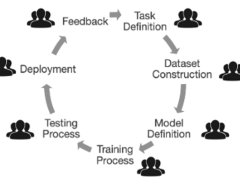

Supporting Developers So They Can Build More Responsible AI

We conducted the first studies with industry practitioners to understand the challenges they face when building responsible AI systems. Our tools and methods are now supporting developers around the world.

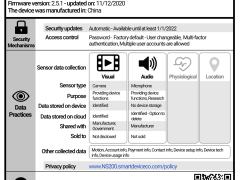

Grading Your Smartphone Apps on Their Privacy Practices

We created a model that analyzed the privacy and data collection practices of over 1 million smartphone apps and then assigned each a public-facing privacy grade. This easy-to-follow grading system raised public awareness and led to improved privacy practices from several app developers.

Improved Math Mastery with the Decimal Point Game

We explored the learning analytics behind our digital learning game to see how student learning responds to changes in the game’s curriculum. Over the past 10 years, over 1,500 students have benefited from the Decimal Point game and curriculum materials.

Designing Teacher-AI Collaboration Systems

Hundreds of elementary and middle school students across the US have learned more as a result of our work on real-time, teacher-AI collaboration systems in K-12 classrooms.

Millions Learned More Math with Our AI Tutor

Millions of middle school and high school students across the US have learned more math as a result of our decades of work with AI-based cognitive tutoring systems.

Human-Centered Privacy for the Internet of Things

As more gadgets become “smart” things and the Internet of Things (IoT) expands to tens of billions of connected devices, we want consumers to be aware of what their devices are doing with their personal data.

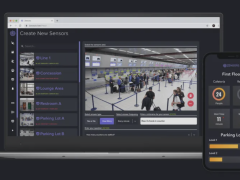

Zensors Turns Cameras Into Smart Sensors

In one application installed at the Pittsburgh International Airport in 2019, Zensors uses existing cameras as powerful general-purpose sensors to provide wait time estimates for the security line, which benefits almost 10 million PIT travelers per year.

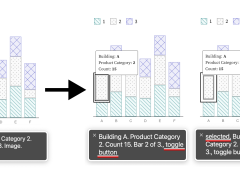

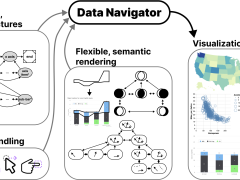

Accessible Visualization Tooling with Data Navigator

A visual labeled as “an image of a bar chart” or that requires a mouse hover to display important info does not support all users. We created the Data Navigator system to give developers a flexible foundation for designing and building more inclusive data experiences.