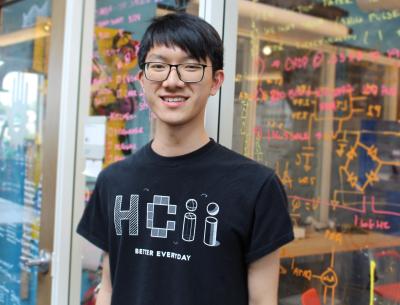

Wu Receives National Science Foundation Graduate Research Fellowship

Jason Wu, a first-year human-computer interaction Ph.D. student, received a 2019 Graduate Research Fellowship from the National Science Foundation.

The NSF Graduate Research Fellowship Program recognizes outstanding graduate students pursuing research in science, technology, engineering, and mathematics (STEM) disciplines, and supports individuals who have demonstrated potential to be high-achieving scientists early in their careers.

This competitive fellowship is worth a total of $138,000 over three years, including a $34,000 stipend and a $12,000 cost-of-education allowance annually. In 2017, the GRFP -- the oldest graduate fellowship of its kind -- had an acceptance rate of about 16%.

Wu is researching methods to make speech interfaces, such as virtual assistants like Siri and Alexa, more accessible to deaf and hard of hearing (DHH) users. His research combines novel sensing hardware and gesture recognition algorithms to improve DHH users’ experience with speech-based virtual assistants.

The popularity of speech-based virtual assistants is on the rise, but not all DHH users are able to use them. For example, some DHH people cannot speak at all, and the speech of those who can is often not understood by the automatic speech recognition (ASR) models that power these devices.

Wu’s research interests and ambitions are largely driven by the people he gets to work with.

“When I was at Google AI the past summer, one of my mentors, who was DHH, was an inspiration to me in the ways that he continuously invented and improved technologies to both serve his own needs and to the needs of the DHH community. My mission is to support all users of technology by creating intelligent and accessible systems,” Wu said.

Wu hopes the benefits of his current accessibility work will extend beyond its current application to the DHH user experience.

“I find that the goals and impact of research can change over time to enable additional, often unforeseen benefits. So while my current aim is to build technologies to allow DHH users to access speech devices, I hope my research can positively affect a larger group of users, such as those with foreign accents, language learners, and others in need of accessible technology,” said Wu.

His NSF proposal, "Enabling Deaf and Hard of Hearing Users to Use Speech Controlled Devices," outlines three methods to improve DHH users’ interactions with speech-based interfaces: improving pronunciation recognition, exploring non-acoustic speech sensing, and adding a speech relay system to improve the usability of audio interfaces.

Wu first plans to explore acoustic methods to improve pronunciation by DHH speakers. DHH speakers have difficulty modulating their voices, which can lead to high variability in pronunciation. Because these acoustic qualities differ from the speech used to create the speech-to-text models in these devices, they create a communication barrier with the virtual assistant. Using speech transcription systems to improve pronunciation and diction for DHH speakers is a largely unexplored area of work. He is also working on methods to improve conversational interactions with virtual assistants so that they are more tolerant of errors and speech disfluencies and provide accessible output for DHH users.

He will also explore non-acoustic methods for sensing human speech (sometimes called silent speech) so that DHH users' accented speech can be better understood by computers. Related research from Wu’s internship with Google AI was recently published at the 2019 Augmented Human International Conference. The paper, “TongueBoard: An Oral Interface for Subtle Input,” used sensors on the roof of the mouth to decode silent speech.

Wu plans to present another paper on voice user interfaces, "ScratchThat: Supporting Command-Agnostic Speech Repair in Voice-Driven Assistants," at the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp). This research introduces a system that allows users to modify their statements to a voice-based virtual assistant just as they could in a normal conversation. The paper showcases a generalizable system that allows voice agents to understand “speech repair” disfluencies -- a common conversational phenomenon where users correct their statements using phrases such as “actually” or “I mean.” The paper was also accepted to the 2019 Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (ACM IMWUT).
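To make the idea of a speech repair concrete, here is a toy sketch in Python. It is not the ScratchThat system and makes no claim about how that paper works. It assumes a fixed list of cue phrases (the two named above) and a naive rule that the words after the cue replace the same number of words at the end of the original command. The function names `split_repair` and `apply_repair` are hypothetical.

```python
import re

# Assumed cue phrases, taken from the examples in the article.
REPAIR_CUES = ("actually", "i mean")

# Match any cue as a whole word, plus trailing spaces or commas.
_CUE_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(cue) for cue in REPAIR_CUES) + r")\b[\s,]*",
    flags=re.IGNORECASE,
)


def split_repair(utterance):
    """Split an utterance at its last repair cue.

    Returns (original, correction); correction is None if no cue is found.
    """
    matches = list(_CUE_PATTERN.finditer(utterance))
    if not matches:
        return utterance.strip(), None
    last = matches[-1]
    original = utterance[: last.start()].rstrip(" ,")
    correction = utterance[last.end():].strip()
    return original, correction or None


def apply_repair(utterance):
    """Naive heuristic (an assumption for illustration only):
    the correction overwrites the last N words of the original,
    where N is the number of words in the correction."""
    original, correction = split_repair(utterance)
    if correction is None:
        return original
    orig_tokens = original.split()
    corr_tokens = correction.split()
    if len(corr_tokens) >= len(orig_tokens):
        return correction
    return " ".join(orig_tokens[: -len(corr_tokens)] + corr_tokens)


if __name__ == "__main__":
    print(apply_repair("set an alarm for 7 am, actually 8 am"))
    print(apply_repair("play some jazz I mean rock"))
```

A fixed word-count rule like this breaks as soon as the correction is longer or shorter than the phrase it replaces, which hints at why a generalizable, command-agnostic approach is a research problem rather than a few lines of string handling.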

Wu thanks his undergraduate advisors, as well as his current Ph.D. advisor, Jeff Bigham, for their support and guidance. A 2018 computer science graduate of the Georgia Institute of Technology, Wu researched wearable computing with the school's GT Ubicomp (Ubiquitous Computing) Lab and Contextual Computing Group.

Congratulations, Jason!