CHI2015: Apparition Harnesses the Crowd To Create Real-Time Prototyping

When designing anything, whether a new video game or the next-big-thing smartphone app, prototyping is key. It allows designers to iterate quickly and gather feedback, but creating and updating a prototype can be cripplingly slow. In an ideal world, prototypes would miraculously update themselves in the time it takes the designer to describe their ideas visually and verbally.

A pipe dream? No way.

A team of researchers from the University of Rochester, MIT, Stanford University and Carnegie Mellon University will present research findings at this week's Association for Computing Machinery (ACM) CHI conference on a new system they've developed that creates an interactive prototype moments after a designer comes up with the idea. Their paper, "Apparition: Crowdsourced User Interfaces That Come to Life as You Sketch Them," received one of the conference's Honorable Mention awards.

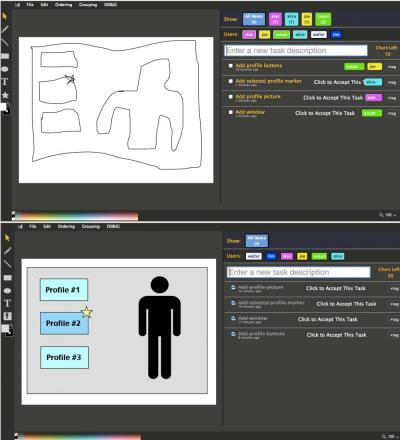

According to the paper's authors, who include HCII Associate Professor Jeff Bigham, Apparition makes near-instant prototyping possible by using human computation to handle tasks that automated systems can't do alone. Users can sketch and speak on a shared canvas. If Apparition's sketch recognition algorithm recognizes a user interface element, it replaces that element with a higher-fidelity representation. It passes all other elements to paid crowd workers, who coordinate to transform each sketch into the desired prototype element.
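The split between automatic recognition and crowd work can be pictured as a simple routing step. The Python sketch below is only an illustration of that idea, not Apparition's actual code: the `route_elements` function, the `toy_recognizer` and the element labels are all hypothetical names invented for this example.

```python
from typing import Callable, List, Optional, Tuple


def route_elements(
    elements: List[str],
    recognize: Callable[[str], Optional[str]],
) -> Tuple[List[Tuple[str, str]], List[str]]:
    """Split sketched elements into auto-replaced ones and ones left for the crowd.

    `recognize` is a hypothetical stand-in for a sketch recognizer: it returns
    the name of a higher-fidelity widget, or None if it does not recognize
    the sketch.
    """
    replaced = []    # (sketch, widget) pairs handled automatically
    for_crowd = []   # sketches handed to crowd workers
    for sketch in elements:
        widget = recognize(sketch)
        if widget is not None:
            replaced.append((sketch, widget))
        else:
            for_crowd.append(sketch)
    return replaced, for_crowd


def toy_recognizer(sketch: str) -> Optional[str]:
    """Toy recognizer (assumption): knows only two kinds of sketched element."""
    known = {"rectangle with label": "button", "long thin rectangle": "text field"}
    return known.get(sketch)


replaced, for_crowd = route_elements(
    ["rectangle with label", "stick-figure character", "long thin rectangle"],
    toy_recognizer,
)
```

Here the two recognized shapes would be swapped for widgets right away, while the stick-figure character, which the toy recognizer does not know, would go to the crowd.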

Consider this scenario: A developer at a small startup is working on a videogame and wants to create a new character. She and her colleague talk about it, but don't agree on how the character will fit into the game. The developer begins to sketch her ideas in Apparition and talk with the colleague about the new character. Almost immediately, she has a rough version of the game, with a new character that reflects what they've discussed. As they talk in more detail, the characters begin to talk and act as the developers have described.

This sort of instantaneous updating seems like magic, but it really results from what's going on behind the scenes. Apparition captures data from both spoken language and what's sketched on the screen. These captures are turned into a list of microtasks that crowd workers, such as those recruited through Amazon Mechanical Turk, can immediately access and begin to complete. To eliminate duplicated effort, the program allows workers to drop an "in-progress" marker on the canvas that alerts other workers to what they are working on. The developer can also set the prototype to "bake in," which allows crowd workers to further refine the interface for the length of time the developer specifies.
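One way to picture the "in-progress" marker is as a claim on a shared task list: once a worker claims a microtask, other workers no longer see it as open. The Python sketch below is a minimal illustration under that assumption. The `Microtask` and `TaskBoard` names and their methods are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Microtask:
    task_id: int
    description: str
    claimed_by: Optional[str] = None  # plays the role of the "in-progress" marker
    done: bool = False


class TaskBoard:
    """Hypothetical shared list of microtasks visible to all crowd workers."""

    def __init__(self) -> None:
        self._tasks: Dict[int, Microtask] = {}
        self._next_id = 0

    def post(self, description: str) -> int:
        """Add a microtask derived from a sketch or spoken request."""
        task = Microtask(self._next_id, description)
        self._tasks[task.task_id] = task
        self._next_id += 1
        return task.task_id

    def open_tasks(self) -> List[Microtask]:
        """Tasks that are neither finished nor marked in progress."""
        return [t for t in self._tasks.values()
                if not t.done and t.claimed_by is None]

    def claim(self, task_id: int, worker: str) -> bool:
        """Drop an in-progress marker; fails if someone else already has."""
        task = self._tasks[task_id]
        if task.done or task.claimed_by is not None:
            return False
        task.claimed_by = worker
        return True

    def complete(self, task_id: int, worker: str) -> None:
        """Mark a task finished; only the worker who claimed it may do so."""
        task = self._tasks[task_id]
        if task.claimed_by != worker:
            raise ValueError("task was not claimed by this worker")
        task.done = True


board = TaskBoard()
jump = board.post("make the character jump when tapped")
score = board.post("add a score counter")
first_claim = board.claim(jump, "worker-1")    # marker placed
second_claim = board.claim(jump, "worker-2")   # rejected, already in progress
```

In this sketch the second worker's claim is refused, so that worker would pick up the score counter instead of duplicating the first worker's effort.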

The result? Designers can go from the sketch to a more refined interface design in an average of eight seconds. Workers responded to tasks in as little as three seconds. "Once created, the prototype quickly reacts to user input," the researchers wrote. "The result: a user could step up to a tablet, sketch a game of Super Mario Brothers, and start playing within seconds."

Bigham's collaborators included Walter Lasecki at the University of Rochester (now a visiting researcher at CMU), Juho Kim at MIT, independent researcher Nicholas Rafter, and Onkur Sen and Michael Bernstein from Stanford. Read their whole paper here, or learn more about CHI2015 here.